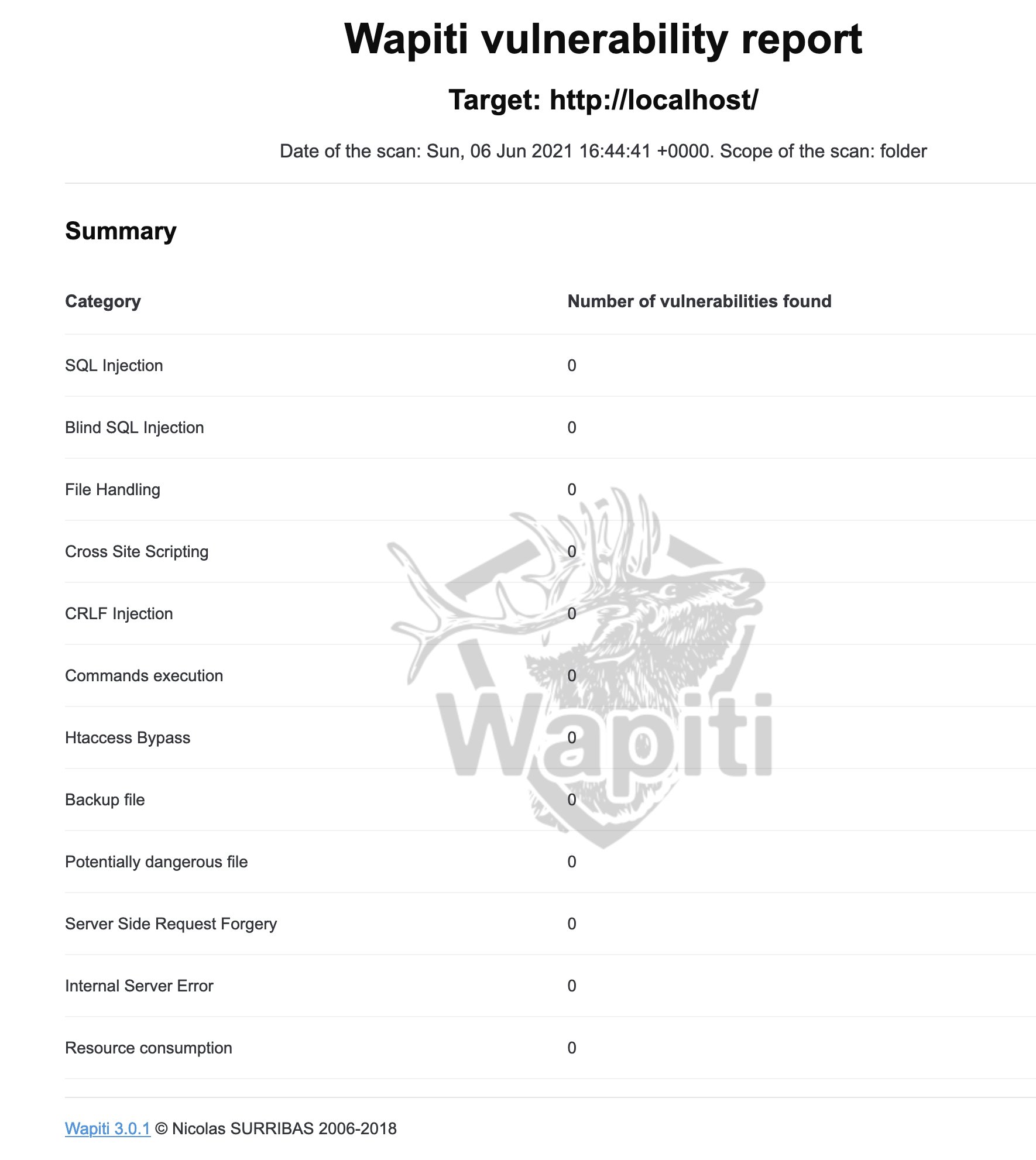

"Black box" testing with Wapiti. Wapiti checks websites and web applications for vulnerabilities: it first maps the site structure and then tries to pass data and payloads to scripts and forms. Nice, so let's check our own server. The resulting report is shown at the top of this post.
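To illustrate the crawl-then-inject principle, here is a heavily simplified Python sketch using only the standard library. It is not Wapiti's implementation: the class FormAndLinkParser, the function build_attack_requests and the two PAYLOADS strings are hypothetical, and the code only builds request descriptions without sending anything to a server.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlencode

# Hypothetical probe strings for illustration only; Wapiti's modules use their own payloads.
PAYLOADS = ["<script>alert(1)</script>", "' OR '1'='1"]


class FormAndLinkParser(HTMLParser):
    """Crawl step: collect links (<a href>) and forms with their input names from one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.forms = []  # each form: {"action": url, "method": "get"/"post", "inputs": [names]}
        self._current_form = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self.links.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "form":
            self._current_form = {
                "action": urljoin(self.base_url, attrs.get("action") or ""),
                "method": (attrs.get("method") or "get").lower(),
                "inputs": [],
            }
        elif tag in ("input", "textarea", "select") and self._current_form is not None:
            # Only named fields are submitted, so only those can carry a payload.
            if attrs.get("name"):
                self._current_form["inputs"].append(attrs["name"])

    def handle_endtag(self, tag):
        if tag == "form" and self._current_form is not None:
            self.forms.append(self._current_form)
            self._current_form = None


def build_attack_requests(form, payloads=PAYLOADS):
    """Attack step: yield one (method, url, data) tuple per input field and payload."""
    for field in form["inputs"]:
        for payload in payloads:
            # Fill every field with a harmless default, then inject into one field at a time.
            params = {name: "test" for name in form["inputs"]}
            params[field] = payload
            if form["method"] == "post":
                yield ("POST", form["action"], params)
            else:
                yield ("GET", form["action"] + "?" + urlencode(params), None)
```

For a page containing `<form action="/search"><input name="q"></form>`, the parser records one GET form with the input q, and build_attack_requests produces one request per payload, each with the payload URL-encoded into q. Injecting into one field at a time makes it easier to tell which parameter caused an unusual response.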

Install with:

sudo apt-get install wapiti

Check that the installation works with wapiti -h:

```
wapiti -h
Oups! No translations found for your language... Using english.
Please send your translations for improvements.
===============================================================

Wapiti-3.0.1 (wapiti.sourceforge.net)
usage: wapiti [-h] [-u URL] [--scope {page,folder,domain,url}]
              [-m MODULES_LIST] [--list-modules] [-l LEVEL] [-p PROXY_URL]
              [-a CREDENTIALS] [--auth-type {basic,digest,kerberos,ntlm}]
              [-c COOKIE_FILE] [--skip-crawl] [--resume-crawl]
              [--flush-attacks] [--flush-session] [-s URL] [-x URL]
              [-r PARAMETER] [--skip PARAMETER] [-d DEPTH]
              [--max-links-per-page MAX] [--max-files-per-dir MAX]
              [--max-scan-time MINUTES] [--max-parameters MAX] [-S FORCE]
              [-t SECONDS] [-H HEADER] [-A AGENT] [--verify-ssl {0,1}]
              [--color] [-v LEVEL] [-f FORMAT] [-o OUPUT_PATH]
              [--no-bugreport] [--version]

Wapiti-3.0.1: Web application vulnerability scanner

optional arguments:
  -h, --help            show this help message and exit
  -u URL, --url URL     The base URL used to define the scan scope (default
                        scope is folder)
  --scope {page,folder,domain,url}
                        Set scan scope
  -m MODULES_LIST, --module MODULES_LIST
                        List of modules to load
  --list-modules        List Wapiti attack modules and exit
  -l LEVEL, --level LEVEL
                        Set attack level
  -p PROXY_URL, --proxy PROXY_URL
                        Set the HTTP(S) proxy to use. Supported: http(s) and
                        socks proxies
  -a CREDENTIALS, --auth-cred CREDENTIALS
                        Set HTTP authentication credentials
  --auth-type {basic,digest,kerberos,ntlm}
                        Set the authentication type to use
  -c COOKIE_FILE, --cookie COOKIE_FILE
                        Set a JSON cookie file to use
  --skip-crawl          Don't resume the scanning process, attack URLs
                        scanned during a previous session
  --resume-crawl        Resume the scanning process (if stopped) even if some
                        attacks were previously performed
  --flush-attacks       Flush attack history and vulnerabilities for the
                        current session
  --flush-session       Flush everything that was previously found for this
                        target (crawled URLs, vulns, etc)
  -s URL, --start URL   Adds an url to start scan with
  -x URL, --exclude URL
                        Adds an url to exclude from the scan
  -r PARAMETER, --remove PARAMETER
                        Remove this parameter from urls
  --skip PARAMETER      Skip attacking given parameter(s)
  -d DEPTH, --depth DEPTH
                        Set how deep the scanner should explore the website
  --max-links-per-page MAX
                        Set how many (in-scope) links the scanner should
                        extract for each page
  --max-files-per-dir MAX
                        Set how many pages the scanner should explore per
                        directory
  --max-scan-time MINUTES
                        Set how many minutes you want the scan to last (floats
                        accepted)
  --max-parameters MAX  URLs and forms having more than MAX input parameters
                        will be erased before attack.
  -S FORCE, --scan-force FORCE
                        Easy way to reduce the number of scanned and attacked
                        URLs. Possible values: paranoid, sneaky, polite,
                        normal, aggressive, insane
  -t SECONDS, --timeout SECONDS
                        Set timeout for requests
  -H HEADER, --header HEADER
                        Set a custom header to use for every requests
  -A AGENT, --user-agent AGENT
                        Set a custom user-agent to use for every requests
  --verify-ssl {0,1}    Set SSL check (default is no check)
  --color               Colorize output
  -v LEVEL, --verbose LEVEL
                        Set verbosity level (0: quiet, 1: normal, 2: verbose)
  -f FORMAT, --format FORMAT
                        Set output format. Supported: json, html (default),
                        txt, openvas, vulneranet, xml
  -o OUPUT_PATH, --output OUPUT_PATH
                        Output file or folder
  --no-bugreport        Don't send automatic bug report when an attack module
                        fails
  --version             Show program's version number and exit
```

Then let the program loose on your own server:

wapiti -u http://localhost/
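If you scan regularly, it can help to script the invocation. The following is a minimal Python sketch, assuming Wapiti 3.0.1 is on the PATH. build_wapiti_command is a hypothetical helper, and it uses only flags and values listed in the wapiti -h output (-u, --scope, -f, -o, --max-scan-time).

```python
import shlex
import subprocess

# Allowed values copied from the `wapiti -h` output of Wapiti 3.0.1.
SCOPES = {"page", "folder", "domain", "url"}
FORMATS = {"json", "html", "txt", "openvas", "vulneranet", "xml"}


def build_wapiti_command(url, scope="folder", fmt="html", output=None, max_scan_time=None):
    """Build the argument list for a Wapiti scan without running it."""
    if scope not in SCOPES:
        raise ValueError(f"unsupported scope: {scope}")
    if fmt not in FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    cmd = ["wapiti", "-u", url, "--scope", scope, "-f", fmt]
    if output is not None:
        cmd += ["-o", output]  # output file or folder
    if max_scan_time is not None:
        cmd += ["--max-scan-time", str(max_scan_time)]  # minutes, floats accepted
    return cmd


if __name__ == "__main__":
    cmd = build_wapiti_command("http://localhost/", fmt="txt",
                               output="/tmp/wapiti-report.txt", max_scan_time=10)
    print(shlex.join(cmd))
    # Only scan hosts you are allowed to test, e.g. your own local server.
    subprocess.run(cmd, check=False)
```

Run as a script, it prints wapiti -u http://localhost/ --scope folder -f txt -o /tmp/wapiti-report.txt --max-scan-time 10 and then starts the scan. Building the list separately from subprocess.run keeps the command easy to inspect before anything is sent to the server.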

The generated HTML reports are stored in:

.wapiti/generated_report
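To open the most recent report without hunting through that folder, a small Python sketch can pick the newest file. It assumes the reports end up under ~/.wapiti/generated_report (the path above, taken relative to the home directory) and that HTML reports end in .html; latest_report is a hypothetical helper, so adjust the directory if your installation writes elsewhere.

```python
from pathlib import Path
from typing import Optional

# Assumption: Wapiti writes its reports below ~/.wapiti/generated_report.
DEFAULT_REPORT_DIR = Path.home() / ".wapiti" / "generated_report"


def latest_report(report_dir: Path = DEFAULT_REPORT_DIR, suffix: str = ".html") -> Optional[Path]:
    """Return the most recently modified report file with the given suffix, or None."""
    if not report_dir.is_dir():
        return None
    # Search recursively in case a report is written as a folder with several files.
    candidates = [p for p in report_dir.rglob(f"*{suffix}") if p.is_file()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(latest_report() or "no report found")
```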

An example is shown above.

Disclaimer: Please note that scanning hosts without written permission is illegal and a punishable offense.

Do not use Wapiti against other people's servers! Use it only on your own server or on VMs for practice and testing purposes.